One of the seminal insights of artificial intelligence work in the past decade is that very large AI programs contain smaller sections inside them that can do the work of the whole program with less memory and fewer operations, thereby speeding up performance and reducing energy use.

That insight, commonly referred to as the “lottery ticket hypothesis,” for a famous paper in 2019 by scholars Jonathan Frankle and Michael Carbin (then at MIT, currently at database company Databricks), is now being put to increasingly practical use as companies find ways to shrink down AI to fit on fewer GPU chips and with less memory and bandwidth required.

In a paper released last week by a team of scholars from Meta’s AI lab, MIT, Cisco Systems, and start-up Zyphra, removing as much as half of Meta’s open-source Llama 2 large language model cut the amount of memory needed by three quarters, with the result that the program could be run on a consumer-grade Nvidia or AMD GPU rather than a huge rack of servers.

“We can remove a substantial fraction of the deepest layers from models with minimal degradation in downstream performance,” write Andrey Gromov and colleagues in the paper, somewhat mysteriously titled “The Unreasonable Ineffectiveness of the Deeper Layers” and posted on the arXiv pre-print server.

For Llama 2, the authors write, “we can eliminate up to roughly half of the layers before the performance collapses.”

The reference to “deep layers” refers to the latter parts of a neural network. Imagine a neural network as ranks of musicians in a marching band. The direction of marching is the way the whole enterprise flows through the data, if you will. At the front of the band might be smaller brass instruments such as trumpets; in the middle of the pack, trombones and tubas; and at the back, the “deep” part, might be percussion instruments such as drums of various sizes and cymbals.

What Gromov and team are seeing is that the drums and cymbals, and perhaps even some tubas, are making no discernible contribution to the sound. They’re there but ineffectual; all the output that matters is in the smaller brass and maybe some of the tubas. It’s as if you could remove a good chunk of the musicians, simply do without them, and have a more efficient band.

In actual neural networks, including generative AI programs such as OpenAI’s GPT-4, instead of rows of musicians, you have successive layers of neural network “parameters” or “weights”: mathematical values that successively transform the input data by multiplying and summing it up, and then producing the output, i.e., the prediction.
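As a rough illustration of that “multiply and sum” flow, here is a toy sketch in Python. The two tiny weight matrices and the input vector are made up for the example, and real language models add non-linear functions and attention on top of this, so treat it as a cartoon of the idea rather than how GPT-4 or Llama 2 is built.

```python
def apply_layer(weights, inputs):
    """One layer: each output value is a weighted sum of all the inputs."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def forward(layers, inputs):
    """Pass the data through every layer in turn, front of the band to back."""
    activations = inputs
    for weights in layers:
        activations = apply_layer(weights, activations)
    return activations


# Hypothetical weights: two layers, each turning 2 numbers into 2 numbers.
layers = [
    [[1.0, 2.0], [0.0, 1.0]],    # shallow layer
    [[0.5, 0.5], [1.0, -1.0]],   # deeper layer
]

output = forward(layers, [1.0, 3.0])
print(output)
```

Pruning, in this picture, simply means deleting entries from the `layers` list and checking how much the final output suffers.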

The experimental approach taken by Gromov and team is to “prune” layers of the network to see what removing them does.

They start by building on insights from other scholars who have tried to take apart OpenAI’s GPT to see what’s making it tick. For example, a 2022 study by Kevin Meng and team at MIT’s Computer Science and Artificial Intelligence Laboratory used a variety of techniques to find out which GPT layers seem to contain information of a factual nature. By following the “information flow,” Meng and colleagues deduced the facts are usually in the “middle” layers of a deep neural network.

Building on that insight, Gromov and team hypothesize that removing the deep layers (the percussion and some tubas) should have little effect on the benchmark tests of AI skill that are applied to large language models, such as question answering. They go about that in two steps.

First, they try a sophisticated approach, which involves measuring which layers are most similar, and dropping ones that seem to add little. It’s as if you asked one of two rows of trumpeters to leave. With each pruning step, they repeatedly test how the modified network performs on tests such as question answering and a basic test of “predicting the next token” that’s common for generative AI.
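One way such a similarity test could be sketched is shown below. It assumes we have recorded the vector of numbers entering each layer, then looks for the run of consecutive layers whose input and output point in nearly the same direction, on the theory that those layers changed little. The angular-distance measure, the function names, and the sample vectors are illustrative assumptions, not details confirmed in this article.

```python
import math


def angular_distance(a, b):
    """Angle between two vectors, scaled so 0 = same direction, 1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.acos(cosine) / math.pi


def most_redundant_block(layer_inputs, block_size):
    """Return the index of the first layer in the block that changes the data least.

    layer_inputs[i] is the vector entering layer i; the last entry is the
    output of the final layer.
    """
    best_start, best_distance = None, float("inf")
    for start in range(len(layer_inputs) - block_size):
        distance = angular_distance(layer_inputs[start], layer_inputs[start + block_size])
        if distance < best_distance:
            best_start, best_distance = start, distance
    return best_start


# Made-up representations for a four-layer network (five vectors in total).
layer_inputs = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, 0.0],
    [-1.0, -0.1],
    [-1.0, -0.15],
]

start = most_redundant_block(layer_inputs, block_size=2)
kept_layers = [i for i in range(4) if not (start <= i < start + 2)]
print(start, kept_layers)
```

In this toy data the early layers swing the vector around sharply while the last two barely move it, so the last two are the ones flagged for removal.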

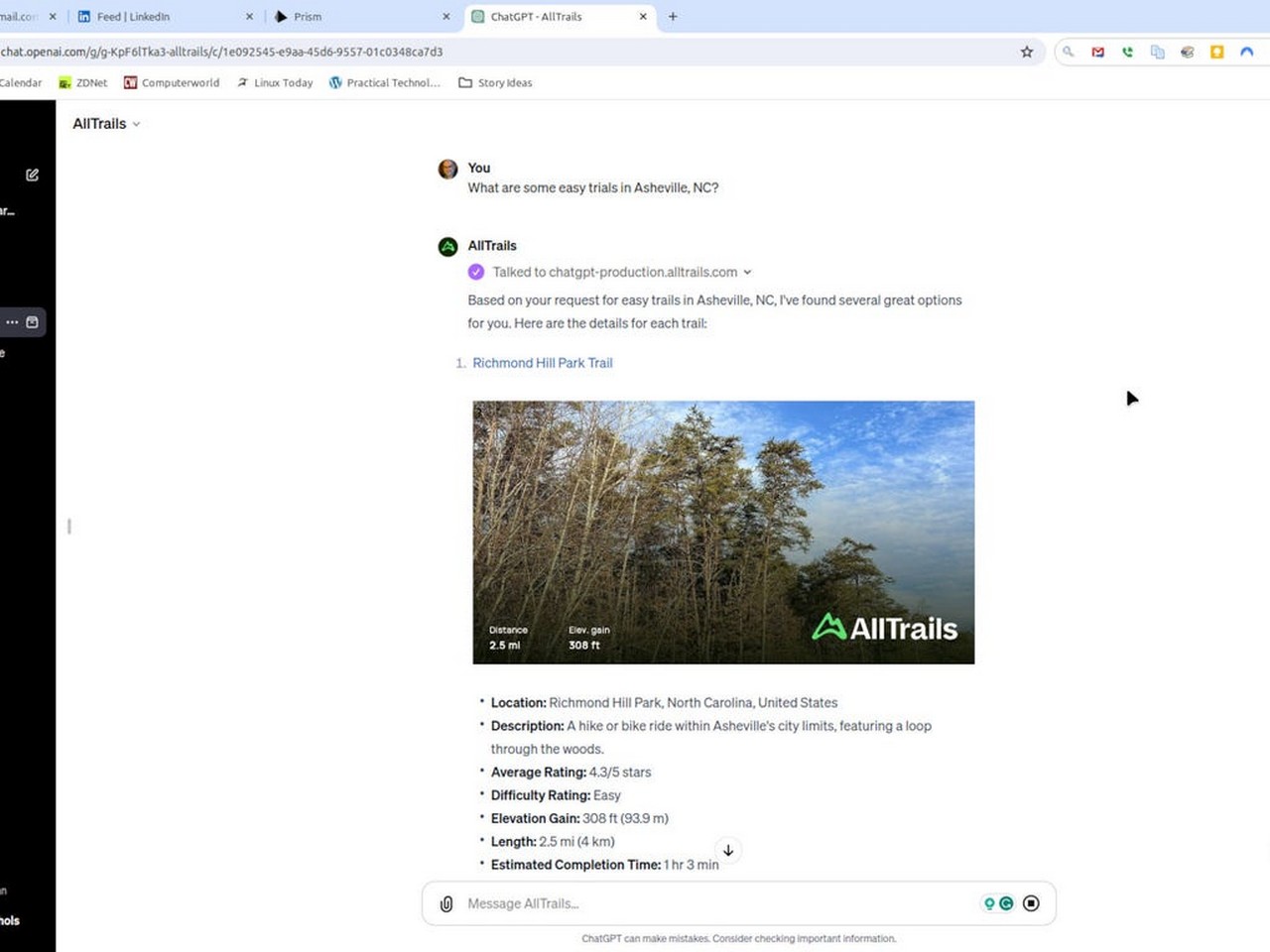

Blocks of a Transformer-based language model contain successive layers. The Meta team tested whether removing layers, starting with the final, or deepest, layers of the network, would affect performance.

Meta

Then they try an even simpler approach: successively removing layers starting from the back of the neural net. It turns out that in the second case, the simpler case, all they need to do is apply a little re-training of the remaining layers, via what’s called fine-tuning, to maintain performance at a relatively constant level.
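The simpler strategy amounts to slicing the end off a list of layers. The sketch below uses placeholder names for eight layers and a hypothetical `prune_from_back` helper; the fine-tuning that follows is a full training step and is not shown.

```python
def prune_from_back(layers, fraction):
    """Drop the deepest `fraction` of layers and keep the front of the stack."""
    n_drop = int(len(layers) * fraction)
    return layers[: len(layers) - n_drop]


# Placeholder names standing in for the blocks of a real model.
layers = [f"block_{i}" for i in range(8)]

kept = prune_from_back(layers, fraction=0.5)
print(kept)
```

After a cut like this, the remaining layers would be briefly fine-tuned and the benchmarks re-run to see whether accuracy has held.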

Up to about half the layers of a neural net can be removed, as shown in the blue and black lines, and the accuracy, left, remains about the same as the baseline, the normal, untouched neural net. Past about forty-five percent of layers removed, the neural net plunges in accuracy.

Meta

Gromov and team find that their pruned neural nets score just as well as the original version. That suggests that “the essential knowledge required to achieve a model’s top score isn’t removed by significant layer removal – even though the fraction can be quite large(!) – until eventually that knowledge is lost at a critical model-dependent threshold.”

The findings of Gromov and team deliver good news and bad news.

On the one hand, their findings mean that large language models can dramatically shrink the computing they need. “In particular, the released version of Llama-2-70B spans 140 GB of memory and consumes approximately 3 × 10^10 FLOPs [floating-point operations] per token,” write the authors.

“With 4-bit quantization [a reduction in the precision of the numbers to save space], and a layer-pruning fraction of 50%, the model fits in approximately 17.5 GB of memory and requires roughly 1.5 × 10^10 FLOPs per token. These memory and compute requirements enable open-weight state-of-the-art models to be run and even fine-tuned efficiently on consumer-level GPUs without any CPU off-loading and with only minor performance trade-offs.”
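The authors’ figures can be reproduced with back-of-the-envelope arithmetic. The sketch below assumes 70 billion parameters stored at 16 bits each in the released model (which is what yields 140 GB), counts a gigabyte as one billion bytes, and treats every parameter as belonging to a prunable layer, which is a simplification.

```python
def model_memory_gb(params_billions, bits_per_param, kept_fraction=1.0):
    """Approximate weight storage in GB (1 GB taken as 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return params_billions * bytes_per_param * kept_fraction


# Assumption: 70 billion parameters at 16 bits each in the released model.
full_gb = model_memory_gb(70, bits_per_param=16)

# 4-bit quantization plus dropping half the layers.
pruned_gb = model_memory_gb(70, bits_per_param=4, kept_fraction=0.5)

# Compute per token scales with the share of layers kept.
full_flops = 3e10
pruned_flops = full_flops * 0.5

print(full_gb, pruned_gb, pruned_flops)
```

Quantization alone accounts for a fourfold saving (16 bits down to 4), and halving the layers doubles it, for an eightfold reduction overall.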

That’s a nice efficiency boost, but here’s the bad news: The fact that so much can be pared away with such pruning implies there could be a lot in a neural network that’s being underutilized. Gromov and team are left with the open question of whether “current pre-training methods are not properly leveraging the parameters in the deeper layers of the network or that the shallow layers play a critical role in storing knowledge.”

To answer that question, more research is needed with more extensive tests of benchmark tasks, to see if other challenges fail differently than basic question-answering.